🕑Estimated time for completion

This section takes about 40 minutes to complete.

Data Milky Way: Brief History (Part 3) - Data Processing

Evolution of Data Processing

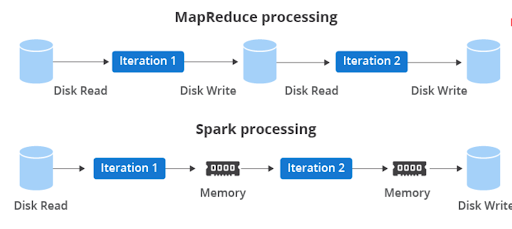

- Recap: Why move from Hadoop to Spark + Object Storage (~20 minute read)

- Bonus Content, not necessary for the progression in this course:

- Intro to Metastores and Data Catalogs (~10 minutes)

- Batch and Micro-Batch Streaming (~20 minutes)

- Continuous Processing (~10 minutes)

- One syntax to rule them all? (~2 minutes)

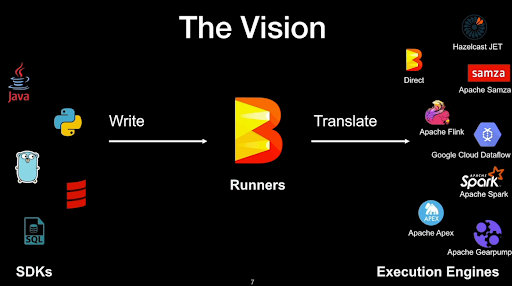

- Apache Beam is based on the Dataflow model introduced by Google (scientific paper, will take a while to digest)

- Aims to unify the semantics of batch & streaming processing across engines (Flink, Spark, etc.)

- You don’t necessarily need streaming, let alone Beam! Many teams simply choose either Spark Structured Streaming or Flink without Beam.

Evaluate your own project’s needs. We will cover streaming in the second half of the course, so more material will be provided then.

Orchestration Core Concepts

But how do we make our pipeline flow? 🌊

- Data Engineering workflows often involve transforming and transferring data from one place to another.

- We want to combine data from different locations, and we want to do this in a way that is reproducible and scalable when there are updates to the data or to our workflows.

- Workflows in real-life have multiple steps and stages. We want to be able to orchestrate these steps and stages and monitor the progress of our workflows.

- Sometimes, everything might work fine with just CRON jobs.

- But other times, you might want to control the state transitions of these steps:

- e.g. if Step A doesn’t run properly, don’t run Step B because the data could be corrupt, instead run Step C.

- Once again, the concept of Directed Acyclic Graphs (DAGs) can come to our rescue.

- Bonus Content: Apache Airflow (32 minute video) is one nice way of setting up DAGs to orchestrate jobs 🌈

- Note: Airflow is primarily designed as a task orchestration tool.

- You can trigger tasks on the Airflow cluster itself or on remote targets (e.g. AWS Fargate, Databricks, etc.).

- NOT designed for transferring large amounts of actual data.

- Reference Documentation

- Play around with Airflow locally (very optional!)

Practical Data Workloads

We’re here to teach you big data skills, but in reality...

Single-Node vs. Cluster

Not everything is Big Data! You don’t always need Spark! Sometimes Pandas deployed on a single node function/container is just fine!.

Batch vs Streaming

Streaming isn’t always the solution! (Optional reading, ~10 minutes)

Orchestration options

Here, it's just useful to know a few of the names but going into detail is not necessary for the course.

DAG-based approaches:

Event-Driven + Declarative

Other triggers: